Micro Continuous Deployment: nginx with auto-SSL vs GitHub Webhooks for your dockerized application

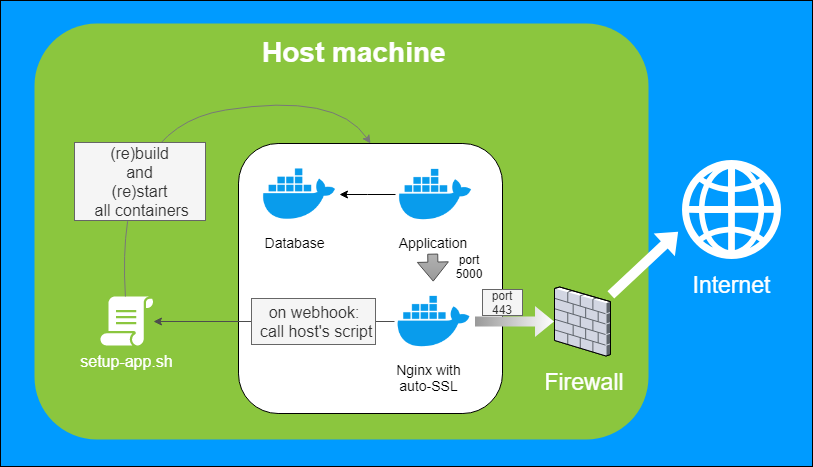

Let’s say you have a project hosted somewhere and want to publish it in a production manner. Meaning, it’s gonna get SSL. But you also don’t want to modify your host server too much, so you’d use Docker for it, right? Aaand you want some mini continuous deployment, where a merge to the default branch on GitHub rebuilds and restarts the whole thing. Let’s make this real.

Target architecture and the problem

Because we want to keep things separated, the host machine is going to run the following Docker containers:

- our application

- app’s database

- nginx with auto-SSL that shares the app (and all other apps) securely with the outside world

Of course, the first two containers are already in place, and we already have a setup-app.sh script that builds and starts the app. There is one more thing we need: a little bit of CD spirit that calls the script automatically.

There is one issue, though: how does the dockerized nginx server execute the rebuild script on the host? We need the…

GitHub Webhook

The GitHub Webhooks page documents the events you can subscribe to. Just remember that

“By default, webhooks are only subscribed to the push event.”

which may not be enough for pull requests merged to the default branch. But let’s do the simple thing for now:

The push event on the default branch fetches the new code and restarts the hosted app!

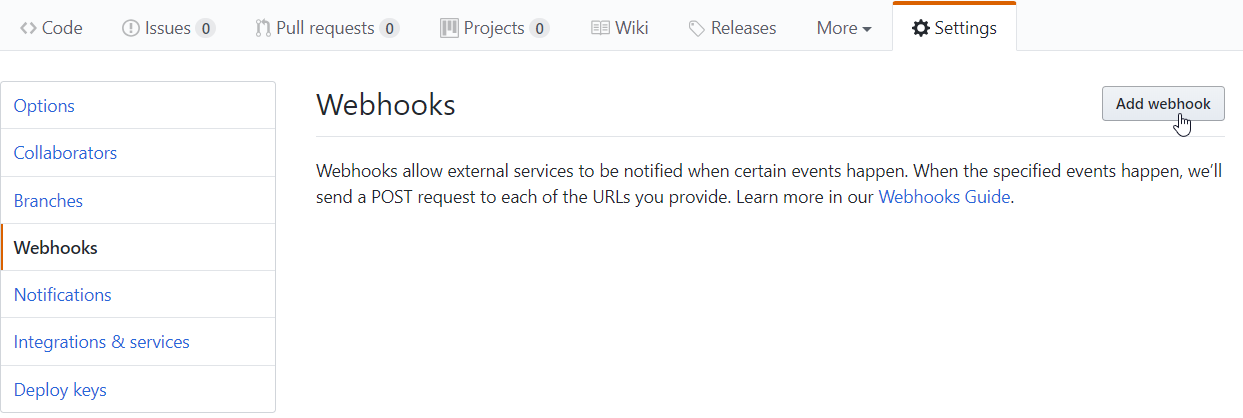

First, go to the Settings of your GitHub project, click Add webhook and suit yourself.

The SSL

So, without dockerizing anything, you could just configure your nginx to react to a URL by executing your custom shell script:

location /github_webhook {

content_by_lua_block {

ngx.header["Content-Type"] = "text/plain"

os.execute("/bin/bash /root/setup-app.sh")

ngx.say("restarting...")

}

}

The setup-app.sh script would fetch new code from GitHub, build it, make a backup of the database, kill the old server, boot up a new one, make sure the firewall ports (like 80 and 443) are open, and connect your containers (app + database) into one virtual network. That’s what my latest app does. All of that depends on your project’s tech stack, and should be treated as a black box for the rest of the article.

However, we want the auto SSL. I use the nginx-auto-ssl for that as it simplifies things greatly. Based on the SITES variable, it creates a config for nginx that would secure the connection to our app.

Let’s assume your app’s Dockerfile EXPOSEs some port and docker-compose.yml relies on the default names, so Compose automatically names the containers and creates a Docker network. In the snippets below, myapp_web_1 is the name of your app’s container and myapp_default is the name of its network. More on this: https://docs.docker.com/compose/networking/

pushd .

DIR=$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )

cd "$DIR"

app_ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' myapp_web_1)

# the port that app's container configuration EXPOSEs

app_port=5000

docker run -d \

--name nginx-auto-ssl \

--restart on-failure \

-p 80:80 \

-p 443:443 \

-e ALLOWED_DOMAINS=mytodoapplication.net \

-e SITES=mytodoapplication.net=$app_ip:$app_port \

-e DIFFIE_HELLMAN=false \

-v $volume_ssl_data:/etc/resty-auto-ssl \

-v $DIR/nginx_conf.d:/etc/nginx/conf.d \

valian/docker-nginx-auto-ssl

# setup the firewall

ufw allow 80/tcp

ufw allow 443/tcp

Note that you need a Docker volume (with its name stored in $volume_ssl_data) for storing the certificates and keys obtained from Let’s Encrypt. It’s very important because Let’s Encrypt enforces rate limits on certificate issuance, and after crossing them you may have to wait up to a whole week until the limit expires for your domain.
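The volume step could look roughly like the sketch below. The helper name ensure_volume and the default volume name nginx-auto-ssl-data are my assumptions, not something Valian’s image requires; the only real commands used are docker volume inspect and docker volume create.

```shell
#!/bin/bash
# Assumed default name; any volume name works as long as it stays the same
# between runs, so the certificates survive container rebuilds.
volume_ssl_data="${volume_ssl_data:-nginx-auto-ssl-data}"

# Create the named volume only if it does not exist yet.
ensure_volume() {
  local name="$1"
  if ! docker volume inspect "$name" >/dev/null 2>&1; then
    docker volume create "$name" >/dev/null
  fi
}

# Usage (before "docker run"):
#   ensure_volume "$volume_ssl_data"
```

Calling it on every run is harmless, since an existing volume is left untouched.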

We also want a webhook, so don’t get attached to the code above. We’ll extend it in a few minutes.

The Webhook

Let’s define a simple script that waits for the right moment to start setup-app.sh.

_internals/listen_to_github_push_webhook.sh:

#!/bin/bash

DIR=$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )

docker_host_ip=$(ip -4 addr show docker0 | grep -Po 'inet \K[\d.]+')

docker_host_listen_port=9999

while true; do

echo "Waiting for webhook calls..."

webhook_data=$(nc -l -w 2 "$docker_host_ip" "$docker_host_listen_port")

echo "$webhook_data"

#TODO: unpack data from it and check what should be executed! For now, let's just call the setup-app.sh.

cd "$DIR/../.."

./setup-app.sh &

done

This will be launched in the background. It constantly listens for a connection on a certain port using netcat (nc). When a connection appears, it gets a short time window (the -w 2 timeout, in seconds) to tell us the secret…

Based on this “secret” text we’ll determine which app should be rebuilt and restarted.

And where does the text come from? From GitHub of course! And that’s where nginx comes in.
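The TODO in the listener script could be filled in along these lines. This is only a sketch: the function names webhook_field and should_deploy are made up for illustration, and it assumes the message arrives as three double-quoted values separated by single spaces, with no quotes inside the values.

```shell
#!/bin/bash
# Prints field N (1 = signature, 2 = event, 3 = third value) of a message
# shaped like: "sig" "evt" "secret". Prints nothing if the message is malformed.
webhook_field() {
  local data="$1" index="$2"
  local pattern='^"\([^"]*\)" "\([^"]*\)" "\([^"]*\)"$'
  case "$index" in
    1|2|3) printf '%s\n' "$data" | sed -n "s/$pattern/\\$index/p" ;;
    *) return 1 ;;
  esac
}

# Only "push" events should trigger a rebuild; GitHub also sends other
# events (for example "ping" right after the webhook is created).
should_deploy() {
  [ "$(webhook_field "$1" 2)" = "push" ]
}

# Usage inside the listener loop:
#   if should_deploy "$webhook_data"; then ./setup-app.sh & fi
```

Using sed instead of eval keeps whatever arrives on the socket from being executed as shell code.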

The SSL and The Webhook

Nginx hosted inside a container would define both a proxy to the application container and a webhook that calls setup-app.sh. The webhook would read a secret code (configured on the GitHub website) from the request headers and pass it to a script on the host machine (outside of any Docker container) using the previously mentioned netcat (nc).

Let’s put it into practice. First, we have to reconfigure the basic template that’s used for every site specified in the SITES variable. To do that we need to:

- specify a custom Dockerfile instead of directly using the image name valian/docker-nginx-auto-ssl

It will also be useful to pass the host’s IP and port number, so the webhook call can trigger the script. By default, nginx removes almost all environment variables inherited from its parent process, so we also have to:

- redefine the default nginx.conf to allow the DOCKER_HOST_IP and DOCKER_HOST_LISTEN_PORT variables to be passed.

_internals/Dockerfile:

FROM valian/docker-nginx-auto-ssl

COPY ./server-proxy-template.conf /usr/local/openresty/nginx/conf/server-proxy.conf

COPY ./nginx.conf /usr/local/openresty/nginx/conf/

Valian’s image is based on OpenResty, an nginx distribution that lets us use Lua for custom logic. We’ll use that for the location /github_webhook { … } part:

_internals/server-proxy-template.conf:

# this configuration is auto-generated based on _internals/server-proxy-template.conf

server {

listen 443 ssl http2;

server_name $SERVER_NAME;

include resty-server-https.conf;

location / {

proxy_pass http://$SERVER_ENDPOINT;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

}

# this is the new part:

location /github_webhook {

content_by_lua_block {

ngx.header["Content-Type"] = "text/plain"

local ip = os.getenv("DOCKER_HOST_IP")

local port = os.getenv("DOCKER_HOST_LISTEN_PORT")

local req_headers = ngx.req.get_headers()

local x_sig = req_headers["X-Hub-Signature"] or ""

local x_evt = req_headers["X-GitHub-Event"] or ""

local x_secret = req_headers["X-GitHub-Delivery"] or ""

local x_values = "\"" .. x_sig .. "\" \"" .. x_evt .. "\" \"" .. x_secret .. "\""

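local sock = ngx.socket.tcp()

-- set the timeout before connecting so it applies to the connect call too
sock:settimeout(5000)

local ok, conn_err = sock:connect(ip, port)

if not ok then

ngx.say("failed to connect: ", conn_err)

return

end

local bytes_sent, send_err = sock:send(x_values)

sock:close()

ngx.say("bytes sent: ", bytes_sent or 0)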

}

}

}

The idea above is to collect three header values, join them into a string in the format "sig" "evt" "secret" (the last value comes from the X-GitHub-Delivery header), then send that over a TCP socket to netcat running in the _internals/listen_to_github_push_webhook.sh script.

The little thing in the listener script is the -w 2 parameter, which sets a timeout (in seconds) so that an idle connection gets closed and the same port can be reused without a hindrance. It’s actually more important for UDP connections than for TCP.
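To try the listener without going through GitHub and nginx, the same message format can be produced by hand. A minimal sketch, assuming the listener from the earlier section is running on port 9999; build_payload is a hypothetical helper name and the header values are fake.

```shell
#!/bin/bash
# Builds the same message the Lua block sends: three double-quoted values
# separated by single spaces, without a trailing newline.
build_payload() {
  printf '"%s" "%s" "%s"' "$1" "$2" "$3"
}

# Usage on the host (values are made up for a manual test):
#   docker_host_ip=$(ip -4 addr show docker0 | grep -Po 'inet \K[\d.]+')
#   build_payload "sha1=test" "push" "manual-test" | nc "$docker_host_ip" 9999
```

How quickly nc exits after sending depends on the netcat variant installed, so the manual test may hang for a moment before the listener’s timeout closes the connection.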

_internals/nginx.conf:

env DOCKER_HOST_IP;

env DOCKER_HOST_LISTEN_PORT;

# everything below is copied from Valian's configuration

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

sendfile on;

include resty-http.conf;

server {

listen 80 default_server;

include resty-server-http.conf;

}

include /etc/nginx/conf.d/*.conf;

}

The final piece boots up nginx with auto-SSL and the webhook configured, (re)starts the script that listens in a loop for webhook calls, and manages the nginx container by itself:

setup-sites.sh:

#!/bin/bash

pushd .

DIR=$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )

cd $DIR

docker_host_ip=$(ip -4 addr show docker0 | grep -Po 'inet \K[\d.]+')

docker_host_listen_port=9999

app_ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' myapp_web_1)

# the port that app's container configuration EXPOSEs

app_port=5000

container="nginx-auto-ssl"

docker stop $container

docker rm $container

docker build -t $container ./_internals

docker run -d \

--name $container \

--restart on-failure \

-p 80:80 \

-p 443:443 \

-e ALLOWED_DOMAINS=mytodoapplication.net \

-e SITES="mytodoapplication.net=$app_ip:$app_port" \

-e DIFFIE_HELLMAN=false \

-e DOCKER_HOST_IP=$docker_host_ip \

-e DOCKER_HOST_LISTEN_PORT=$docker_host_listen_port \

-v $volume_ssl_data:/etc/resty-auto-ssl \

-v $DIR/nginx_conf.d:/etc/nginx/conf.d \

$container

ip_nginx=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' nginx-auto-ssl)

echo "nginx IP: $ip_nginx"

ufw allow 80/tcp

ufw allow 443/tcp

ufw allow from $ip_nginx/24 to any port $docker_host_listen_port

docker network connect myapp_default nginx-auto-ssl

# Restart the listener script in case it has changed.

# Watch out: setup-sites.sh may itself be launched by the listener

# script, so start the new listener before killing the old one.

# Otherwise this script dies together with the old listener and

# nothing is left listening for webhooks!

old_listener_pid=$(pidof -x ./_internals/listen_to_github_push_webhook.sh)

nohup ./_internals/listen_to_github_push_webhook.sh &>/dev/null &

#disown

if [ -n "$old_listener_pid" ]; then kill $old_listener_pid; fi

popd

As you read through the script, you should find it self-explanatory.
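One detail worth a note: both scripts extract the docker0 address with grep -P, which is a GNU grep extension and may be missing on some systems. A more portable variant could use awk instead. This is a sketch, and first_ipv4 is a name I made up.

```shell
#!/bin/bash
# Reads the output of "ip -4 addr show <interface>" on stdin and prints
# the first IPv4 address without the /prefix part.
first_ipv4() {
  awk '$1 == "inet" { split($2, parts, "/"); print parts[1]; exit }'
}

# Usage:
#   docker_host_ip=$(ip -4 addr show docker0 | first_ipv4)
```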

Final thoughts

Why is it a primitive Continuous Deployment, you ask? If the webhook is called while nginx is not listening for it, the event is lost. So, in essence, two successive calls in a short period will trigger the rebuild only once. It’s not that hard to solve. For instance, the fix could be a simple queue like Mosquitto or, even simpler, just a re-check of the last event time after the current build finishes.
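The simpler re-check idea could be sketched with a flag file and a lock directory instead of timestamps. Everything here is hypothetical: the function names, the STATE_DIR location, and the assumption that the listener calls record_event for each webhook and then run_pending_builds in the background.

```shell
#!/bin/bash
# Assumed location for the flag and the lock; override as needed.
STATE_DIR="${STATE_DIR:-${TMPDIR:-/tmp}/micro-cd-state}"

# Called for every webhook: remember that a build was requested.
record_event() {
  mkdir -p "$STATE_DIR"
  touch "$STATE_DIR/pending"
}

# Runs the given build command until no request is pending.
# mkdir is atomic, so only one caller at a time holds the lock; other
# callers return immediately and leave the pending flag for the holder.
run_pending_builds() {
  mkdir -p "$STATE_DIR"
  while [ -e "$STATE_DIR/pending" ] && mkdir "$STATE_DIR/lock" 2>/dev/null; do
    while [ -e "$STATE_DIR/pending" ]; do
      rm -f "$STATE_DIR/pending"
      "$@"
    done
    rmdir "$STATE_DIR/lock"
  done
}

# Usage inside the listener loop:
#   record_event
#   run_pending_builds ./setup-app.sh &
```

Events that arrive during a build only set the flag again, so any number of them collapse into a single extra rebuild once the current one finishes.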

Another primitive aspect is the handling of build failures. This, however, could be implemented in your setup-app.sh. Any kind of notification (email?) could be sent when something does not work. That’s up to the script, not the method overall.
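A failure notification could be wrapped around the build like this. It’s a sketch under assumptions: run_build_with_notification is a made-up name, and the notify command is a placeholder you’d replace with whatever sends your email or chat message. It receives a short message and the path to the build log.

```shell
#!/bin/bash
# Runs the build command ($1), captures its output, and calls the notify
# command ($2) with a message and the log file path when the build fails.
run_build_with_notification() {
  local build_cmd="$1" notify_cmd="$2" log status
  log=$(mktemp)
  if "$build_cmd" >"$log" 2>&1; then
    status=0
  else
    status=$?
  fi
  if [ "$status" -ne 0 ]; then
    "$notify_cmd" "build failed with exit code $status" "$log"
  fi
  rm -f "$log"
  return "$status"
}

# Usage (send_alert is a hypothetical function or script of yours):
#   run_build_with_notification ./setup-app.sh send_alert
```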

However, for a simple home project it’s a very lightweight solution that doesn’t require any CI/CD system. Although I’m not an expert in the Linux/bash/DevOps/servers domain, I think it’s based on well-established technologies, so it’s highly portable and doesn’t cost you either RAM (like Jenkins) or money (like a CI/CD SaaS system that’s free until it is not).

Resources

- Let’s Encrypt - where your free SSL certificate comes from

- Let’s Encrypt: Rate Limits - keep in mind those for key storage volume

- Dockerfile - here you can read about EXPOSE

- Valian/docker-nginx-auto-ssl - an easy take on securing our app with Docker and nginx + auto-SSL inside